Building an AI Agent with llama.cpp

llama.cpp is the original, high-performance framework that powers many popular local AI tools, including Ollama, local chatbots, and other on-device LLM solutions. By working directly with llama.cpp, you can minimize overhead, gain fine-grained control, and optimize performance for your specific hardware, making your local AI agents and applications faster and more configurable.

In this tutorial, I will guide you through building AI applications using llama.cpp, a powerful C/C++ library for running large language models (LLMs) efficiently. We will cover setting up a llama.cpp server, integrating it with LangChain, and building a ReAct agent capable of using tools like web search and a Python REPL.

1. Setting up the llama.cpp Server

This section covers the installation of llama.cpp and its dependencies, configuring it for CUDA support, building the necessary binaries, and running the server.

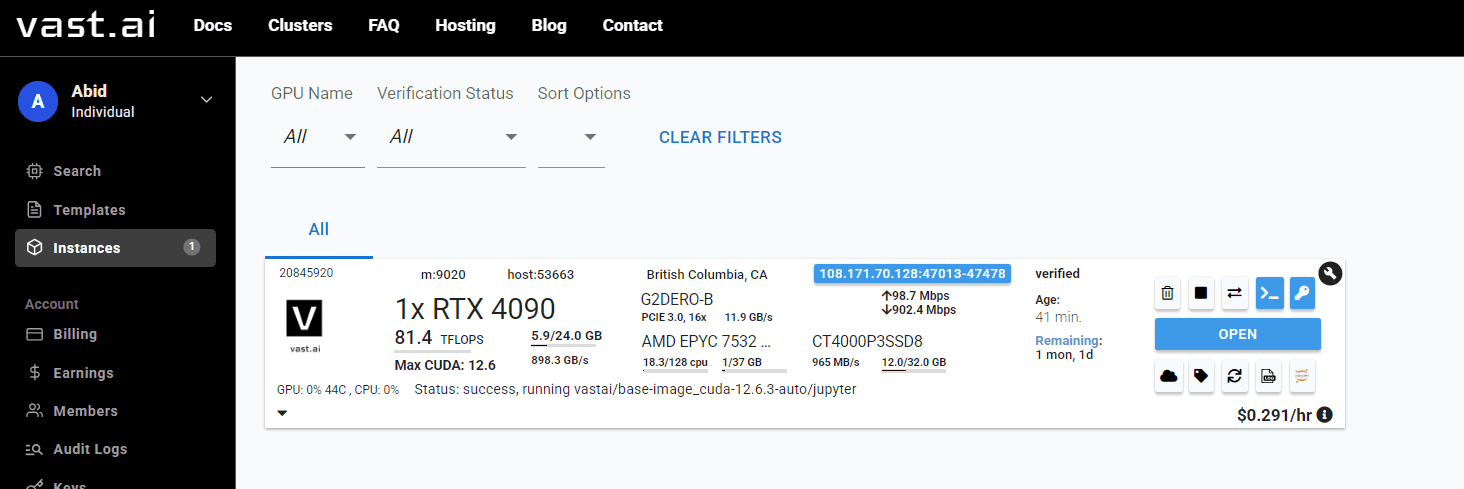

Note: we are using an NVIDIA RTX 4090 graphics card on Linux with the CUDA toolkit pre-configured. If you don't have access to similar local hardware, you can rent a GPU instance from Vast.ai at a lower price.

```
apt-get update
apt-get install pciutils build-essential cmake curl libcurl4-openssl-dev git -y
```

```
# Clone llama.cpp repository
git clone https://github.com/ggml-org/llama.cpp

# Configure build with CUDA support
cmake llama.cpp -B llama.cpp/build \
    -DBUILD_SHARED_LIBS=OFF \
    -DGGML_CUDA=ON \
    -DLLAMA_CURL=ON
```

```
# Build all necessary binaries including server
cmake --build llama.cpp/build --config Release -j --clean-first

# Copy all binaries to main directory
cp llama.cpp/build/bin/* llama.cpp/
```

```
./llama.cpp/llama-server \
    -hf unsloth/gemma-3-4b-it-GGUF:Q4_K_XL \
    --host 0.0.0.0 \
    --port 8000 \
    --n-gpu-layers 999 \
    --ctx-size 8192 \
    --threads $(nproc) \
    --temp 0.6 \
    --cache-type-k q4_0 \
    --jinja
```
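Loading the model can take a while, so it helps to wait until the server is ready before sending requests. The following is a minimal sketch, assuming the server listens on localhost:8000 as in the command above and answers GET /health with HTTP 200 once the model is loaded. The helper names `probe_health` and `wait_until_ready` are illustrative and not part of llama.cpp.

```python
import time
import urllib.error
import urllib.request
from typing import Callable

# Assumption: llama-server runs on localhost:8000 and exposes GET /health,
# returning HTTP 200 once the model has finished loading.
HEALTH_URL = "http://localhost:8000/health"


def probe_health(url: str = HEALTH_URL, timeout: float = 2.0) -> bool:
    """Return True if the server answers the health check with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an error status (for example while loading).
        return False


def wait_until_ready(
    probe: Callable[[], bool], attempts: int = 30, delay: float = 2.0
) -> bool:
    """Call probe() up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


# Usage (requires the server to be running):
#   ready = wait_until_ready(probe_health)
```

Passing the probe in as a function keeps the retry logic independent of the network call, so the loop can be exercised without a running server.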

```
curl -X POST http://localhost:8000/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d '{
        "messages": [
            {"role": "user", "content": "Hello! How are you today?"}
        ],
        "max_tokens": 150,
        "temperature": 0.7
    }'
```

Output:

```
{"choices":[{"finish_reason":"length","index":0,"message":{"role":"assistant","content":"\nOkay, user greeted me with a simple \"Hello! How are you today?\" \n\nHmm, this seems like a casual opening. The user might be testing the waters to see if I respond naturally, or maybe they genuinely want to know how an AI assistant conceptualizes \"being\" but in a friendly way. \n\nI notice they used an exclamation mark, which feels warm and possibly playful. Maybe they're in a good mood or just trying to make conversation feel less robotic. \n\nSince I don't have emotions, I should clarify that gently but still keep it warm. The response should acknowledge their greeting while explaining my nature as an AI. \n\nI wonder if they're asking because they're curious about AI consciousness, or just being polite"}}],"created":1749319250,"model":"gpt-3.5-turbo","system_fingerprint":"b5605-5787b5da","object":"chat.completion","usage":{"completion_tokens":150,"prompt_tokens":9,"total_tokens":159},"id":"chatcmpl-jNfif9mcYydO2c6nK0BYkrtpNXSnseV1","timings":{"prompt_n":9,"prompt_ms":65.502,"prompt_per_token_ms":7.278,"prompt_per_second":137.40038472107722,"predicted_n":150,"predicted_ms":1207.908,"predicted_per_token_ms":8.052719999999999,"predicted_per_second":124.1816429728092}}
```
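The raw JSON is hard to read at a glance. As a rough sketch, the helper below pulls out the reply text, whether it was cut off by max_tokens, and the generation speed. It assumes the underscore-separated field names of the OpenAI-compatible format (finish_reason, usage.total_tokens) and a timings object with predicted_n and predicted_ms; `summarize_completion` is a hypothetical name used only for illustration.

```python
import json


def summarize_completion(raw: str) -> dict:
    """Extract the reply text and a few basic statistics from a chat completion."""
    data = json.loads(raw)
    choice = data["choices"][0]
    usage = data.get("usage", {})
    # Assumption: "timings" is a llama.cpp-specific extension and may be absent.
    timings = data.get("timings", {})

    predicted_n = timings.get("predicted_n")
    predicted_ms = timings.get("predicted_ms")
    tokens_per_second = None
    if predicted_n and predicted_ms:
        # Generated tokens divided by generation time in seconds.
        tokens_per_second = predicted_n / (predicted_ms / 1000.0)

    return {
        "text": choice["message"]["content"],
        "finish_reason": choice.get("finish_reason"),
        # "length" means the reply stopped because it hit max_tokens.
        "truncated": choice.get("finish_reason") == "length",
        "total_tokens": usage.get("total_tokens"),
        "tokens_per_second": tokens_per_second,
    }
```

For the response shown here, the finish reason is "length", so the reply was truncated at the 150-token limit set in the request.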

2. Building an AI Agent with LangGraph and llama.cpp

Now, let's use LangGraph and LangChain to interact with the llama.cpp server and build a multi-tool AI agent.

- Set your Tavily API key for search capabilities.

- For LangChain to work with the local llama.cpp server (which emulates the OpenAI API), set OPENAI_API_KEY to local or any other non-empty string; the base_url directs requests to the local server.

```
export TAVILY_API_KEY="your_api_key_here"
export OPENAI_API_KEY=local
```
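A missing key usually surfaces later as a confusing authentication error, so a quick check up front can save time. This is a small sketch, assuming the two variable names are TAVILY_API_KEY and OPENAI_API_KEY; `missing_env_vars` is a hypothetical helper, not part of any library.

```python
import os
from typing import Mapping, Sequence

# Assumption: these are the two variables the rest of the tutorial relies on.
REQUIRED_VARS = ("TAVILY_API_KEY", "OPENAI_API_KEY")


def missing_env_vars(
    names: Sequence[str] = REQUIRED_VARS,
    env: Mapping[str, str] = os.environ,
) -> list[str]:
    """Return the names that are unset or contain only whitespace."""
    return [name for name in names if not env.get(name, "").strip()]


missing = missing_env_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("All required environment variables are set.")
```

Variables exported in a shell are only visible to processes started from that shell, so if you work in a notebook, export them before launching it.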

```
%%capture
!pip install -U \
    langgraph tavily-python langchain langchain-community langchain-experimental langchain-openai
```

```
from langchain_openai import ChatOpenAI

# Point the OpenAI-compatible client at the local llama.cpp server
llm = ChatOpenAI(
    model="unsloth/gemma-3-4b-it-GGUF:Q4_K_XL",
    temperature=0.6,
    base_url="http://localhost:8000/v1",
)
```

- TavilySearchResults: Allows the agent to search the web.

- PythonREPLTool: Provides the agent with a Python Read-Eval-Print Loop to execute code.

```
from langchain_community.tools import TavilySearchResults
from langchain_experimental.tools.python.tool import PythonREPLTool

search_tool = TavilySearchResults(max_results=5, include_answer=True)
code_tool = PythonREPLTool()

tools = [search_tool, code_tool]
```

```
from langgraph.prebuilt import create_react_agent

agent = create_react_agent(
    model=llm,
    tools=tools,
)
```
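To make the ReAct idea concrete, here is a conceptual sketch of the loop such an agent runs: the model either requests a tool or gives a final answer, and each tool result is fed back as an observation. This is not LangGraph's implementation. The model, the tools, and the message shape (`scripted_model`, `fake_search`, `fake_python`, the `tool_call` key) are all hypothetical stand-ins so the loop runs without a server.

```python
from typing import Callable


# Hypothetical stand-ins for real tools; they only echo their input.
def fake_search(query: str) -> str:
    return f"(search results for: {query})"


def fake_python(code: str) -> str:
    return "(output of running the code)"


TOOLS: dict[str, Callable[[str], str]] = {
    "search": fake_search,
    "python": fake_python,
}


def scripted_model(messages: list[dict]) -> dict:
    """Hypothetical model: requests one search, then answers from the result."""
    last = messages[-1]
    if last["role"] == "user":
        call = {"name": "search", "input": last["content"]}
        return {"role": "assistant", "content": "", "tool_call": call}
    answer = f"Answer based on {last['content']}"
    return {"role": "assistant", "content": answer, "tool_call": None}


def react_loop(model, tools, question: str, max_steps: int = 5) -> list[dict]:
    """Alternate between model replies (reason/act) and tool results (observe)."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            break  # no tool requested: the reply is the final answer
        observation = tools[call["name"]](call["input"])
        messages.append({"role": "tool", "content": observation})
    return messages
```

The `max_steps` cap is a design choice in this sketch: it stops a model that keeps requesting tools from looping forever.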

3. Test the AI Agent with Example Queries

Now, we will test the AI agent and also display which tools the agent uses.

```
def extract_tool_names(conversation: dict) -> list[str]:
    """Collect the names of all tools called in an agent conversation."""
    tool_names = set()
    for msg in conversation.get('messages', []):
        calls = []
        if hasattr(msg, 'tool_calls'):
            # LangChain message objects expose tool calls as an attribute
            calls = msg.tool_calls or []
        elif isinstance(msg, dict):
            # Plain dict messages, with a fallback to additional_kwargs
            calls = msg.get('tool_calls') or []
            if not calls and isinstance(msg.get('additional_kwargs'), dict):
                calls = msg['additional_kwargs'].get('tool_calls', [])
        else:
            ak = getattr(msg, 'additional_kwargs', None)
            if isinstance(ak, dict):
                calls = ak.get('tool_calls', [])
        for call in calls:
            if isinstance(call, dict):
                if 'name' in call:
                    tool_names.add(call['name'])
                elif 'function' in call and isinstance(call['function'], dict):
                    fn = call['function']
                    if 'name' in fn:
                        tool_names.add(fn['name'])
    return sorted(tool_names)
```

```
def run_agent(question: str):
    # Run the agent on a single user question
    result = agent.invoke({"messages": [{"role": "user", "content": question}]})
    # The last message holds the agent's final answer
    raw_answer = result["messages"][-1].content
    tools_used = extract_tool_names(result)
    return tools_used, raw_answer
```

```
tools, answer = run_agent("What are the top 5 breaking news stories?")
print("Tools used ➡️", tools)
print(answer)
```

Output:

```

Tools used ➡️ ['tavily_search_results_json']

Here are the top 5 breaking news stories based on the provided sources:

- Gaza Humanitarian Crisis: Ongoing conflict and challenges in Gaza, including the Eid al-Adha holiday, and the retrieval of a Thai hostage's body.

- Russian Drone Attacks on Kharkiv: Russia continues to target Ukrainian cities with drone and missile strikes.

- Wagner Group Departure from Mali: The Wagner Group is leaving Mali after heavy losses, but Russia's Africa Corps remains.

- Trump-Musk Feud: A dispute between former President Trump and Elon Musk could have implications for Tesla stock and the U.S. space program.

```

```
tools, answer = run_agent(
    "Write a code for the Fibonacci series and execute it using Python REPL."
)
print("Tools used ➡️", tools)
print(answer)
```

Output:

```

Tools used ➡️ ['Python_REPL']

The Fibonacci series up to 10 terms is [0, 1, 1, 2, 3, 5, 8, 13, 21, 34].

```

Final Thoughts

In this guide, I have used a small quantized LLM, which sometimes struggles with accuracy, especially when selecting tools. If your goal is to build production-ready AI agents, I highly recommend running the latest, full-sized models with llama.cpp. Larger and more recent models generally provide better results and more reliable outputs.

Setting up llama.cpp can be more challenging than using user-friendly tools like Ollama. However, if you are willing to invest the time to debug, optimize, and tailor llama.cpp for your specific hardware, the performance gains and flexibility are well worth it.

One of the biggest advantages of llama.cpp is its efficiency: you don't need high-end hardware to get started. It runs well on regular CPUs and laptops without dedicated GPUs, making local AI accessible to almost everyone. And if you ever need more power, you can always rent an affordable GPU instance from a cloud provider.